Many businesses are racing to make their data usable by AI assistants like ChatGPT, Claude, or Perplexity. The problem? That data already lives behind APIs that are too complex for non-developers to use. Training an LLM to speak your API’s language is error-prone, expensive, and often impossible at scale. This is where Model Context Protocol (MCP) servers step in.

They act as a bridge between your API and AI assistants: instead of teaching an LLM how to call your API, you expose a set of ready-made, structured tools.

Suddenly, natural language queries like:

Show me women’s shoes under 100 PLN available in the Polish channel

…turn into real, validated API calls — safely, securely, and without building a new UI or marketplace plugin.

If your company already uses GraphQL, you are closer to this future than you might think.

What Is Model Context Protocol (MCP)?

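- MCP is an open protocol that standardizes how AI assistants connect to external tools and data sources. MCP servers are the new extension surface for AI assistants: they let you expose your existing APIs as tools.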

- No plugin marketplace friction. No extra UI. Just direct access for AI.

For e-commerce, SaaS, or any data-driven business, this means that product discovery, catalog browsing, and customer support can be made AI-ready without building a new interface.
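Under the hood, an assistant invokes a tool by sending the MCP server a JSON-RPC 2.0 message with the method tools/call. That message names the tool and passes structured arguments. The minimal sketch below builds such a message for the shoe query from the introduction. The tool name list_products, the channel slug, and the max_price argument are hypothetical and only illustrate the idea.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments that an assistant might derive from
# "Show me women's shoes under 100 PLN available in the Polish channel"
payload = build_tool_call(
    request_id=1,
    tool_name="list_products",
    arguments={
        "channel": "channel-pl",  # hypothetical channel slug
        "search": "women's shoes",
        "max_price": 100,  # hypothetical argument name
    },
)
print(payload)
```

The server then checks these arguments against the tool's declared input schema before it calls the real API. The assistant therefore never has to write raw queries.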

The Challenge: Making APIs Assistant-Friendly

Most APIs aren’t “assistant-friendly” out of the box. They require careful typing, validation, and simplification.

That’s why we combined the Saleor GraphQL API with Ariadne Codegen and FastMCP, deployed the result to FastMCP Cloud (a hosted service for running and sharing MCP servers), and showed how quickly AI assistants can start working with real product data.


Our Solution: GraphQL + Ariadne Codegen + FastMCP

GraphQL is schema-first: your inputs and outputs are already explicitly typed. That’s exactly what an MCP server needs.
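The helper below makes this fit concrete. It is hypothetical and not part of Ariadne Codegen or FastMCP; FastMCP actually derives tool schemas from Python type hints. It maps GraphQL variable types to the kind of JSON Schema an MCP tool uses to describe its inputs:

- Non-null types (ending in !) become required arguments.
- List types become arrays.
- Named input objects and enums are left as references to definitions elsewhere.

```python
# Built-in GraphQL scalars and their JSON Schema equivalents.
# Custom scalars (e.g. Saleor's DateTime) would need their own entries.
SCALARS = {
    "String": {"type": "string"},
    "ID": {"type": "string"},
    "Int": {"type": "integer"},
    "Float": {"type": "number"},
    "Boolean": {"type": "boolean"},
}


def graphql_type_to_json_schema(type_ref: str) -> tuple[dict, bool]:
    """Convert a GraphQL type reference such as "[ID!]!" into a JSON Schema
    fragment plus a flag telling whether the value is required (non-null)."""
    required = type_ref.endswith("!")
    inner = type_ref[:-1] if required else type_ref
    if inner.startswith("[") and inner.endswith("]"):
        # List type: describe the item type recursively
        item_schema, _ = graphql_type_to_json_schema(inner[1:-1])
        return {"type": "array", "items": item_schema}, required
    if inner in SCALARS:
        return dict(SCALARS[inner]), required
    # Input objects and enums are defined elsewhere in the schema
    return {"$ref": f"#/$defs/{inner}"}, required


def variables_to_input_schema(variables: dict[str, str]) -> dict:
    """Build an MCP-style inputSchema object from GraphQL variable definitions."""
    properties: dict[str, dict] = {}
    required: list[str] = []
    for name, type_ref in variables.items():
        schema, is_required = graphql_type_to_json_schema(type_ref)
        properties[name] = schema
        if is_required:
            required.append(name)
    return {"type": "object", "properties": properties, "required": required}


# Variables of a product-listing query like the one used later in this article
schema = variables_to_input_schema({
    "channel": "String!",
    "where": "ProductWhereInput",
    "sortBy": "ProductOrder",
    "search": "String",
    "after": "String",
})
print(schema["required"])
```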

With Ariadne Codegen and FastMCP we can:

- Auto-generate Pydantic models from a GraphQL schema.

- Build a typed async client for queries and mutations.

- Reuse fragments for consistent data structures.

- Expose those queries as MCP tools with a simple decorator.

Result: You turn your GraphQL schema into an AI-ready MCP server in minutes.

Real Case Study: Saleor E-Commerce API

To prove this in practice, we built an MCP server on top of Saleor, an open-source e-commerce platform with a rich GraphQL API.

We focused on the Products query, which is both powerful and complex:

- Complicated inputs (filters, full-text search, ordering rules).

- Relay-style pagination, which most LLMs struggle with.

- A perfect AI use case: natural product discovery and catalog lookup.

Instead of teaching ChatGPT how to write cursor-based queries, we wrapped Saleor’s GraphQL with Ariadne Codegen + FastMCP. The assistant can now call typed, validated tools directly.

And to make it real, we used nimara.store, Mirumee’s demo Saleor storefront, as the test environment.

Implementation Walkthrough

Project Setup

Your pyproject.toml does most of the heavy lifting:

```toml
[project]
# name, version, and other project metadata omitted for brevity
dependencies = ["fastmcp==2.12.2", "ariadne-codegen==0.15.2"]

[project.scripts]
mcp = "graphql_to_mcp.main:main"

[tool.ariadne-codegen]
remote_schema_url = "https://nimara-demo.eu.saleor.cloud/graphql/"
queries_path = "graphql_to_mcp/queries.graphql"
target_package_path = "graphql_to_mcp"
```

That’s it. Ariadne Codegen pulls down the schema, processes your queries, and spits out a fully typed client plus models.

Defining Queries and Fragments

Example queries.graphql:

```graphql
fragment Product on Product {
  id
  name
  slug
  thumbnail {
    url
  }
  pricing {
    priceRange {
      start { gross { amount currency } }
      stop { gross { amount currency } }
    }
  }
}

query ListProducts($channel: String!, $where: ProductWhereInput, $sortBy: ProductOrder, $search: String, $after: String) {
  products(channel: $channel, where: $where, sortBy: $sortBy, search: $search, first: 10, after: $after) {
    edges { node { ...Product } }
    pageInfo { hasNextPage endCursor }
  }
}

query ProductById($id: ID!, $channel: String!) {
  product(id: $id, channel: $channel) {
    ...Product
  }
}
```

By defining a Product fragment, we get a reusable product type that can be shared across multiple queries in the app. This keeps both the GraphQL and the generated Python code clean, consistent, and easy to extend.

Run ariadne-codegen once (and again whenever queries.graphql changes), and you are ready to write the MCP layer.

Exposing GraphQL with FastMCP

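The graphql_client package below is generated by Ariadne Codegen, which builds each part from the schema and your queries:

- Input types such as ProductWhereInput and ProductOrder, and enums such as OrderDirection and ProductOrderField, come straight from the Saleor schema.
- The Product fragment becomes a matching Product model.
- Every query in queries.graphql becomes a typed async method on the generated Client. ListProducts becomes list_products() and ProductById becomes product_by_id().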

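```python
import asyncio

from graphql_to_mcp.graphql_client.client import Client
from graphql_to_mcp.graphql_client.enums import OrderDirection, ProductOrderField
from graphql_to_mcp.graphql_client.input_types import ProductOrder

client = Client(url="https://nimara-demo.eu.saleor.cloud/graphql/")


async def main() -> None:
    # Fetch a single product by its global ID in the "channel-us" channel
    result = await client.product_by_id(id="UHJvZHVjdDoyMjI=", channel="channel-us")
    print(result.product)

    # Full-text search sorted by price, ascending. The page size is fixed by
    # "first: 10" in the ListProducts query, so list_products() takes no first argument
    page = await client.list_products(
        channel="channel-us",
        search="Tshirt",
        sort_by=ProductOrder(
            direction=OrderDirection.ASC,
            field=ProductOrderField.PRICE,
        ),
    )
    if page.products:
        for edge in page.products.edges:
            print(edge.node.name, edge.node.slug)


asyncio.run(main())
```

FastMCP makes it easy to register tools. You can describe each input for the LLM with Annotated type hints and provide the overall tool description through a Python docstring: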

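```python
from typing import Annotated

from fastmcp import FastMCP

from graphql_to_mcp.graphql_client.client import Client
from graphql_to_mcp.graphql_client.fragments import Product

mcp = FastMCP("saleor-products")  # server name is illustrative
client = Client(url="https://nimara-demo.eu.saleor.cloud/graphql/")


@mcp.tool(
    annotations={
        "title": "Get product by ID",
        "readOnlyHint": True,  # the tool does not modify any data
        "idempotentHint": True,  # repeated calls have no additional effect
        "openWorldHint": True,  # the tool talks to an external system (Saleor)
    }
)
async def get_product(
    id: Annotated[str, "ID of a product"],
    channel: Annotated[
        str,
        """
        Slug of a channel for which the data should be returned. This field is required.
        If the user has not provided it, ask them which channel to use.
        """,
    ],
) -> Product | None:
    """
    Fetch a single product from Saleor by its ID and channel.

    This tool retrieves product information such as its ID, name, slug,
    thumbnail, and price range.

    Products are channel-aware, meaning that their availability and pricing can vary
    based on the specified channel.
    """
    result = await client.product_by_id(id=id, channel=channel)
    # product is None when no product with this ID is visible in the channel
    return result.product
```

For the complete example, check our Ariadne Codegen example repository on GitHub.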
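The registry below shows roughly what a decorator like mcp.tool extracts from a function such as get_product. It is hypothetical and uses only the standard library. It is not FastMCP's implementation, which builds a full JSON Schema with Pydantic. It only shows how three pieces of the function become tool metadata:

- The function name becomes the tool name.
- The docstring becomes the tool description.
- The string metadata in each Annotated hint becomes that parameter's description.

```python
import asyncio
import inspect
from typing import Annotated, get_args, get_origin, get_type_hints

# Hypothetical in-memory registry: tool name -> metadata and callable
TOOLS: dict[str, dict] = {}


def tool(func):
    """Register func as a tool, reading descriptions from its signature."""
    hints = get_type_hints(func, include_extras=True)
    parameters = {}
    for name in inspect.signature(func).parameters:
        hint = hints[name]
        description = None
        if get_origin(hint) is Annotated:
            # Annotated[str, "text"] -> base type str plus metadata ("text",)
            base, *metadata = get_args(hint)
            description = next(
                (m.strip() for m in metadata if isinstance(m, str)), None
            )
        else:
            base = hint
        parameters[name] = {"type": base.__name__, "description": description}
    TOOLS[func.__name__] = {
        "description": inspect.getdoc(func),
        "parameters": parameters,
        "callable": func,
    }
    return func


@tool
async def get_product(
    id: Annotated[str, "ID of a product"],
    channel: Annotated[str, "Slug of a channel for which the data should be returned"],
) -> dict:
    """Fetch a single product by its ID and channel."""
    # Stand-in for the real GraphQL call
    return {"id": id, "channel": channel}


print(TOOLS["get_product"]["parameters"]["channel"]["description"])
print(asyncio.run(TOOLS["get_product"]["callable"]("UHJvZHVjdDoyMjI=", "channel-us")))
```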

To run the service, use:

```bash
git clone https://github.com/mirumee/mcp-ariadne-codegen-example
cd mcp-ariadne-codegen-example
python -m venv .venv
source .venv/bin/activate
pip install -e .
# "mcp" is the console script defined in pyproject.toml (graphql_to_mcp.main:main)
mcp
```

You can test your MCP server directly in Postman. The Postman team has added first-class support for MCP requests, which makes it easy to call your tools, inspect request and response shapes, and validate everything before connecting an AI assistant. See the Postman MCP documentation for details.

Connect Your MCP Server to ChatGPT

You already have an MCP server; now let’s connect it to ChatGPT.

All we need is a public URL that ChatGPT can reach. With FastMCP Cloud, this is straightforward: push your code to a GitHub repository and register it on fastmcp.cloud, which deploys the server and gives you its URL.

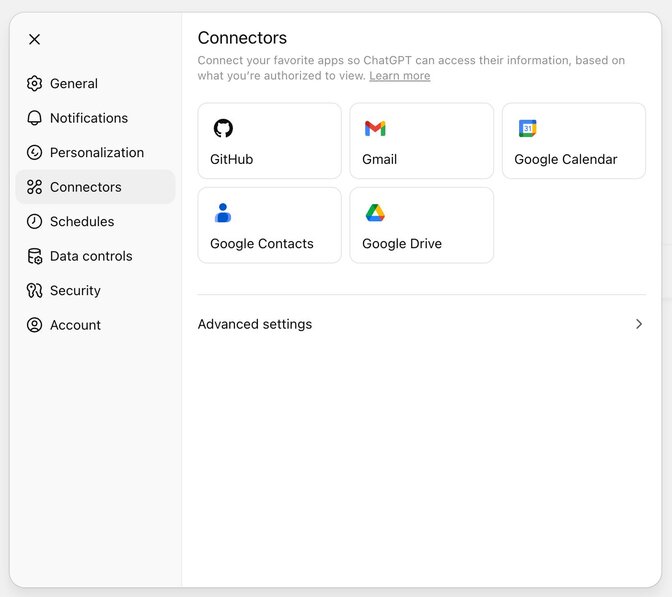

Once you have a working URL, you can connect it in ChatGPT:

- Open ChatGPT settings and go to the Connections tab

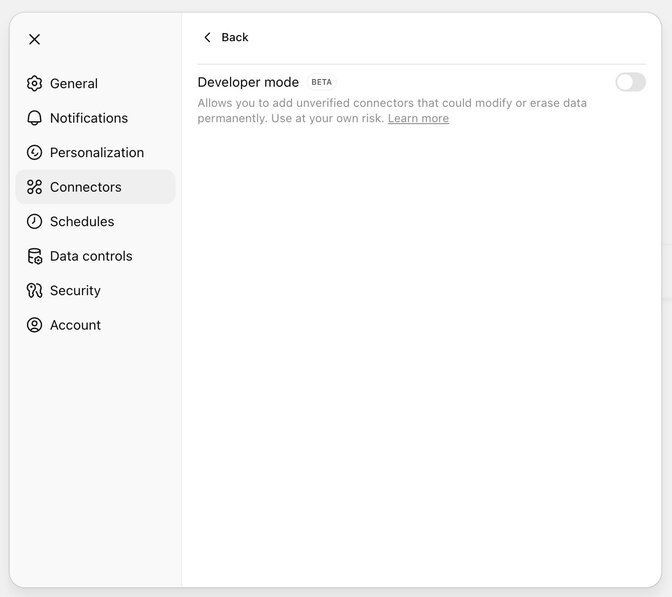

- Enable Developer Mode

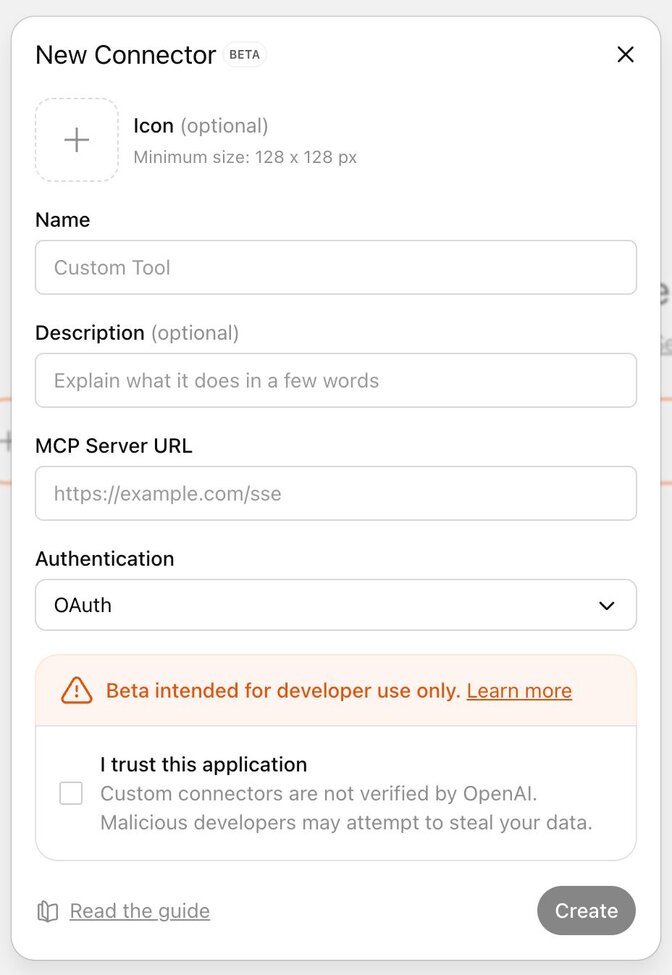

- Register your new MCP server URL

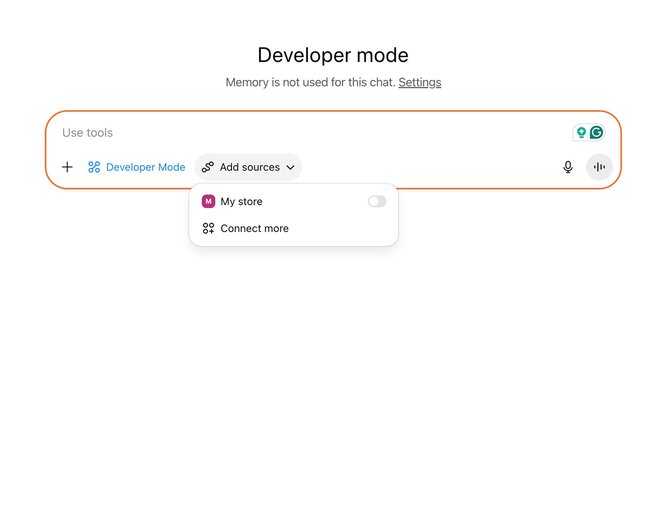

Once registered, your MCP tools appear directly inside ChatGPT. From there, you can search, fetch, and query products from Saleor using natural language.

Tips, Tricks and Warnings

Through this implementation, we learned:

- Keep it simple: flatten pagination where possible; assistants struggle with cursor-based logic.

- Schema quality matters: clean, typed GraphQL schemas produce the best MCP results.

- Watch the scale: fetching the whole catalog in a single tool call works for catalogs under roughly 1,000 items, but larger setups require more careful paging.

- Test before connecting: Postman now supports MCP requests, making it a perfect validation tool before exposing endpoints to AI.

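- Deploy smart: use FastMCP Cloud for easy hosting and URL registration, but don’t overlook API security. A public MCP URL without authentication exposes every registered tool to anyone who finds it, so expose only the data and operations you are comfortable sharing.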

Practical Payoffs

- Minimal boilerplate: your GraphQL schema already defines your inputs and outputs.

- Type-safe: generated Pydantic models validate data against the types in your schema.

- Async-ready: perfect for real-time AI interactions.

- Connector-ready: instantly usable in ChatGPT today.

- Fast to market: from schema to AI tools in hours, not weeks.

What It Unlocks for Your Business

Imagine your sales team, customer support, or even clients directly querying your product catalog in natural language — powered by your existing GraphQL API, no new frontend required.

Instead of expensive UI projects or brittle plugins, you turn your current schema into the control panel for AI assistants.

Summary

This approach shows how an existing GraphQL schema can be transformed into an AI-ready MCP server — quickly, safely, and without reinventing your architecture. Along the way, we’ve seen:

- Why MCP matters now

- What business problems it solves

- How we implemented it in practice

- The tips and caveats that matter

- Where to go next if you want to try it yourself

With these tools, your API becomes more than just an integration layer — it becomes a direct interface for AI assistants.

Want to make your APIs AI-ready?

We’ll show you how to transform your GraphQL schemas into MCP servers in days, not months.