We’ve been watching the rise of AI coding tools with a mix of curiosity and healthy skepticism. One idea that sounded especially promising was connecting a Figma design system directly to the Cursor AI code editor, so that it could generate UI that actually matches the design system.

So I tried exactly that: I wired up a Figma design system to Cursor via MCP and asked it to build UI from our components.

Short version: it worked… but only up to a point. Beyond that point, things got weird.

This article is a quick field report: what I did, what worked, what broke, and what I’d need to see before recommending this workflow in a production setting.

Experiment Setup and Constraints

Integrating Figma with Cursor was easy: the process doesn’t involve writing any custom scripts. You just configure the native options in both tools.

Next, I needed a source of truth for the assistant to read from. I could have used a commercial design system we maintain at Mirumee, but for test purposes I decided against using such a large, living organism, for a few reasons:

- Feature drift in mature files: Our existing system doesn’t fully adopt every brand-new Figma feature. Updating it just for an experiment would be costly.

- Human-first conventions: A design system used in a classic, non-AI workflow carries conventions (naming, component scaling, instance swaps) tailored to human designers. This doesn’t necessarily make parsing easier for an LLM.

- Scale gets in the way: Huge token, typography, and color sets increase ambiguity and make outputs harder to control.

- Easier debugging: A small, fresh system gives complete context and faster troubleshooting.

With those constraints in mind, I created a compact design system and asked Cursor to generate UI against this test environment.

What Actually Worked

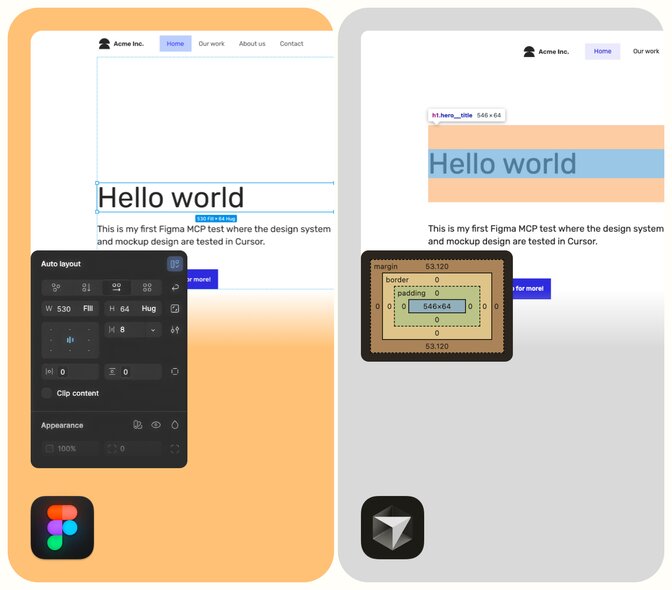

Cursor AI picked up the basic layout concept and referenced the design system in a way that was directionally correct. The assistant understood the general structure and produced a reasonable starting point.

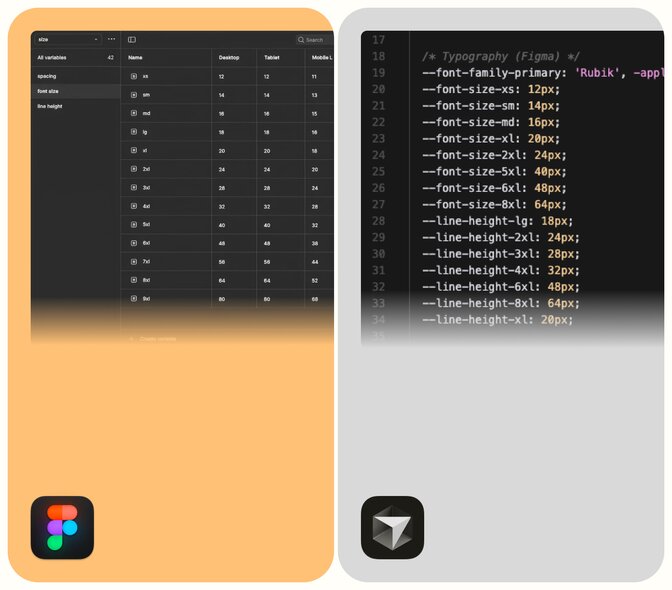

At first glance, the tokens in Figma and the ones in the design-tokens.css file that Cursor generated even looked identical, which was promising.
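Comparing tokens by eye only goes so far, so a check like this could be scripted. Below is a minimal sketch, assuming the Figma variables have been exported as a flat name-to-value mapping and that design-tokens.css declares its tokens as CSS custom properties. The function names and the sample token names are made up for illustration; they are not taken from the experiment.

```python
import re

# Matches CSS custom property declarations such as "--color-primary: #0055ff;"
TOKEN_RE = re.compile(r"(--[\w-]+)\s*:\s*([^;]+);")


def parse_css_tokens(css_text: str) -> dict[str, str]:
    """Return {token name: normalized value} for every custom property found."""
    return {name: value.strip().lower() for name, value in TOKEN_RE.findall(css_text)}


def diff_tokens(figma_tokens: dict[str, str], css_tokens: dict[str, str]) -> dict[str, list[str]]:
    """Compare tokens exported from Figma (assumed flat mapping) with parsed CSS tokens."""
    figma = {name: value.strip().lower() for name, value in figma_tokens.items()}
    shared = figma.keys() & css_tokens.keys()
    return {
        "missing_in_css": sorted(figma.keys() - css_tokens.keys()),
        "extra_in_css": sorted(css_tokens.keys() - figma.keys()),
        "value_mismatch": sorted(name for name in shared if figma[name] != css_tokens[name]),
    }


if __name__ == "__main__":
    # Hypothetical sample data, not the tokens from the actual test system.
    css = ":root { --color-primary: #0055FF; --spacing-md: 16px; --spacing-xl: 40px; }"
    figma = {"--color-primary": "#0055ff", "--spacing-md": "12px", "--radius-sm": "4px"}
    print(diff_tokens(figma, parse_css_tokens(css)))
```

An empty result in all three lists would confirm that the two token sets really are identical, rather than just looking that way.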

However, the generated output didn’t meet my expectations. To tighten things up, I rewrote the prompts to include a button component with its color variables, all the components for a landing page, and so on. Most importantly, I specified what the assistant should not do. None of this improved the final result, though.

Where Things Fell Apart

1. “Creative” Styling Outside the Design System

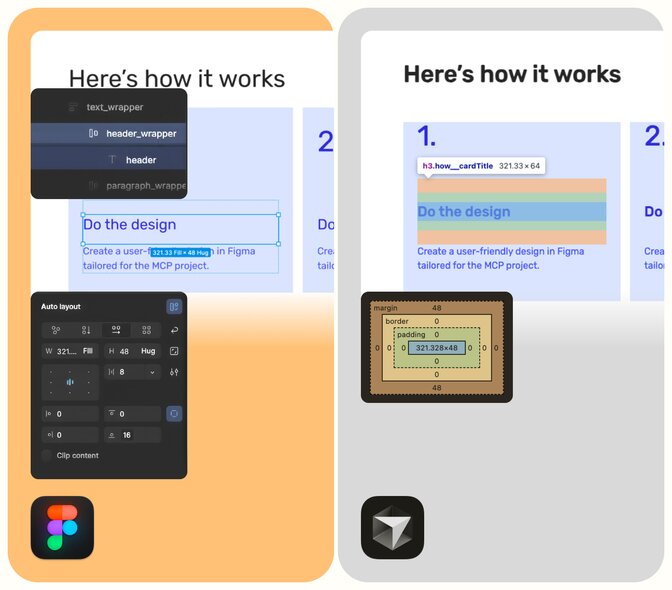

Despite having access to the design system, Cursor regularly decided to improvise. Even when I told it not to invent new styles, it still tended to slip in “helpful” adjustments that broke visual consistency.

From a designer’s perspective, this is a dealbreaker. The whole point of connecting Figma is to reduce drift, not battle a second opinion baked into the AI.

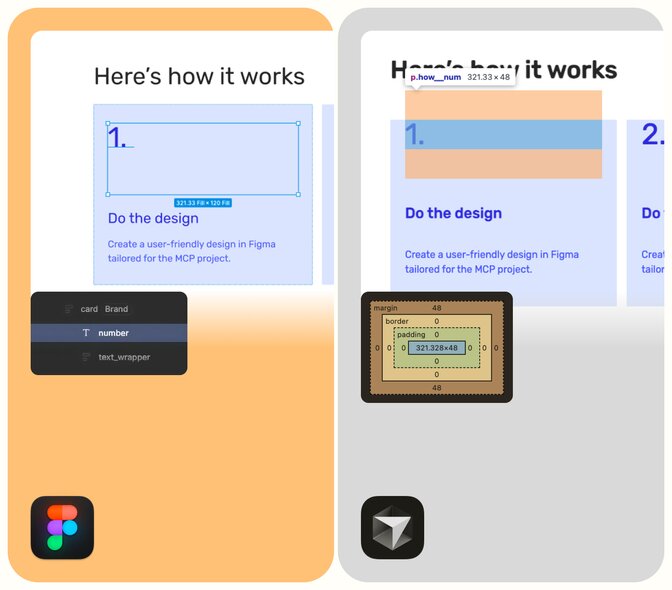

2. Components Without Reliable Variants

I expected Cursor to use the base button component and respect the defined variants. Instead, some variants were implemented, and others were ignored.

It also missed specific details and occasionally combined properties in ways that didn’t match any variant in the design system.
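One way to catch these mismatches without eyeballing every button is to describe the variant matrix as data and validate each generated usage against it. This is only a sketch: the property names and values in `BUTTON_VARIANTS` are hypothetical stand-ins, not the variants from my test system, and it assumes you can extract each button’s properties from the generated code as a simple dictionary.

```python
# Hypothetical variant matrix; replace with the properties defined in your Figma component.
BUTTON_VARIANTS = {
    "style": {"primary", "secondary"},
    "size": {"sm", "md"},
    "state": {"default", "disabled"},
}


def variant_problems(props: dict[str, str], variants: dict[str, set[str]] = BUTTON_VARIANTS) -> list[str]:
    """List every way a generated button's properties deviate from the variant matrix."""
    problems = []
    for axis, allowed in variants.items():
        if axis not in props:
            problems.append(f"missing property '{axis}'")
        elif props[axis] not in allowed:
            problems.append(f"'{axis}={props[axis]}' is not a defined value")
    # Properties the design system does not define at all.
    for axis in sorted(props.keys() - variants.keys()):
        problems.append(f"unknown property '{axis}'")
    return problems


if __name__ == "__main__":
    print(variant_problems({"style": "ghost", "size": "md"}))
```

An empty list means the button maps to a defined variant; anything else points at exactly which property was invented or dropped.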

3. Adding Extra Margins

Cursor insisted on adding extra spacing and margins around headers, which didn’t come from any tokens at all.

You can work around this by repeatedly correcting it or explicitly listing rules like:

- Do not add margin-top or margin-bottom to headings.

- Do not add any outer margin to the container.

- Only use spacing tokens X, Y, Z.

But at that point, it starts to feel like you’re reverse-engineering the assistant rather than getting help from it.
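Instead of repeating the “only use spacing tokens” rule in every prompt, it could be enforced after the fact with a small lint step. Here is a rough sketch, assuming spacing tokens are exposed as CSS custom properties with a `--spacing-` prefix (a naming convention I’m assuming for the example) and that the generated CSS keeps each declaration on a single line. It is a regex-based check, not a real CSS parser, so treat it as a starting point.

```python
import re

# Spacing-related declarations; the lookbehind skips names like "--spacing-margin" or "row-gap".
DECLARATION_RE = re.compile(
    r"(?<![\w-])(margin(?:-(?:top|right|bottom|left))?"
    r"|padding(?:-(?:top|right|bottom|left))?"
    r"|gap)\s*:\s*([^;}]+)"
)

# Allowed value parts: 0, auto, or a spacing token (the "--spacing-" prefix is an assumption).
ALLOWED_VALUE_RE = re.compile(r"^(?:0|auto|var\(--spacing-[\w-]+\))$")


def find_untokenized_spacing(css_text: str) -> list[tuple[int, str, str]]:
    """Return (line number, property, value) for spacing declarations that bypass the tokens."""
    violations = []
    for line_no, line in enumerate(css_text.splitlines(), start=1):
        for prop, value in DECLARATION_RE.findall(line):
            parts = value.split()
            if not all(ALLOWED_VALUE_RE.match(part) for part in parts):
                violations.append((line_no, prop, value.strip()))
    return violations


if __name__ == "__main__":
    # Hypothetical generated CSS, for illustration only.
    sample = "h1 {\n  margin-top: 24px;\n  padding: var(--spacing-md) 0;\n}\n"
    for line_no, prop, value in find_untokenized_spacing(sample):
        print(f"line {line_no}: {prop}: {value} does not use a spacing token")
```

A check like this doesn’t stop the assistant from improvising, but it does turn “double-check every detail against Figma” into something a script can flag.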

Conclusions

Connecting a Figma design system to Cursor felt like it should be a breakthrough: a single source of truth driving both design and code, with AI filling in the boring parts.

In practice, I found it’s not quite there yet.

Is it just a prompting problem? The honest answer: partly, yes. I’m almost certain the prompts weren’t perfect. With more time, a stricter prompt template, and a comprehensive “playbook” for guiding the assistant, I could probably get better results.

But that’s also the point. If we have to:

- Constantly remind the tool not to change fonts or spacing,

- List out everything it’s not allowed to do,

- Double-check every small detail against Figma,

then the value proposition starts to erode. At that stage, it’s faster to write the components yourself and use AI for smaller, well-bounded tasks (like generating tests or wiring up state logic).